Advanced Use

Multiple URLs

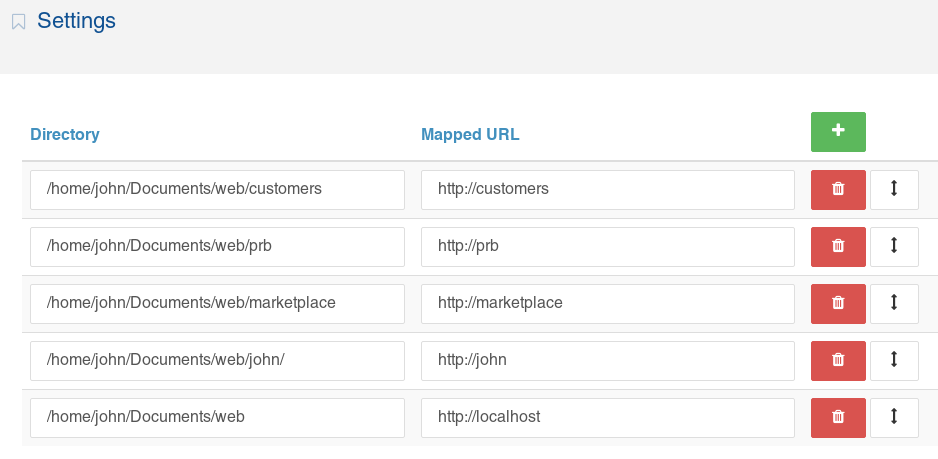

Perhaps (like me) you have your development system configured for a number of URLs in addition to the usual http://localhost. The Site Sniffer settings page allows multiple URLs and their associated directories to be entered so that the sites found are categorised appropriately.

The directories are scanned in order of priority, and any site found under more than one URL is listed under the URL with the highest priority, that is, the one closest to the top of the list.
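The priority rule can be illustrated with a short Python sketch. This is not Site Sniffer's own code; the URLs, directories and the function name `url_for_site` are hypothetical and serve only to show how the first matching entry in the list wins.

```python
from pathlib import PurePosixPath

# Hypothetical settings: (URL, directory) pairs in priority order, highest first.
URL_DIRECTORIES = [
    ("http://clients.localhost", "/var/www/clients"),
    ("http://localhost", "/var/www"),
]

def url_for_site(site_path, url_directories=URL_DIRECTORIES):
    """Return the first (highest-priority) URL whose directory contains site_path."""
    site = PurePosixPath(site_path)
    for url, directory in url_directories:
        directory_path = PurePosixPath(directory)
        # A site belongs to a directory if it is that directory or lies beneath it.
        if directory_path == site or directory_path in site.parents:
            return url
    return None  # the site is not under any configured directory
```

In this sketch a site at /var/www/clients/acme lies beneath both directories, but it is reported under http://clients.localhost because that entry is nearer the top of the list.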

If you find that Site Sniffer runs into resource limits when scanning for sites and returns 500 timeout errors, this feature can be used to make the searching more focussed and hence allow you to reduce the depth of searches.

Exclude Patterns

Exclude patterns can be used to prevent matching directories from being searched. Patterns should be entered one per line and may include system glob wildcards such as "*". For example, the pattern */old_sites/* will exclude all directories containing "/old_sites/" in the path from being searched.
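As a rough illustration of how such patterns behave, the following Python sketch uses the standard `fnmatch` module. It is an approximation only: Site Sniffer's own matching may differ in detail, and the function names and example paths are hypothetical.

```python
from fnmatch import fnmatchcase

def parse_patterns(text):
    """Split a settings value into patterns, one per line, ignoring blank lines."""
    return [line.strip() for line in text.splitlines() if line.strip()]

def is_excluded(path, patterns):
    """Return True if the path matches any exclude pattern."""
    # In fnmatch-style matching, "*" matches any run of characters, including "/".
    return any(fnmatchcase(path, pattern) for pattern in patterns)

# Hypothetical settings value with two patterns.
patterns = parse_patterns("*/old_sites/*\n*/backups/*\n")
```

With these patterns, a path such as /var/www/old_sites/acme would be skipped, while /var/www/current/acme would still be searched.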

Package Versions

Version 1.1 of Site Sniffer added reporting of package versions. This is disabled by default because it can be expensive to process. To report on package versions, tick the checkbox on the settings page. Each package listed will then be suffixed with its version number.

CPU Resources

The searching conducted by Site Sniffer can be heavy on CPU resources and may hit resource limits, resulting in 500 timeout errors. The point at which this happens depends very much on how powerful your development system is, how fast the disks are, how much memory you have and how your sites are spread about the system.

I have a shed-load of Concrete CMS sites on my development system dating back many years and have yet to actually run into such a limit. But in theory it could happen.

Should you run into resource limits, the first thing to do is to look critically at your settings and consider whether the search depth is unnecessarily large. Perhaps you could reduce it from 5 to 4 and get things back under control.
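To see why search depth matters, consider this minimal Python sketch of a depth-limited directory scan. It is not how Site Sniffer is implemented; the function `find_sites` and the use of a "concrete" subdirectory as the marker of a site are assumptions made purely for illustration.

```python
import os

def find_sites(root, max_depth, marker="concrete"):
    """Collect directories under root, at most max_depth levels down, that contain marker."""
    root = os.path.abspath(root)
    base_depth = root.count(os.sep)
    found = []
    for current, dirs, _files in os.walk(root):
        depth = current.count(os.sep) - base_depth
        if marker in dirs:
            found.append(current)
            dirs[:] = []  # a site was found, so do not descend into it
        elif depth >= max_depth:
            dirs[:] = []  # depth limit reached, so stop descending
    return found
```

Each extra level of depth multiplies the number of directories that may need to be visited, so trimming the depth by even one level can reduce the work considerably.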

Next, consider whether any directory paths beneath the directories you have configured for Site Sniffer are simply not relevant, and exclude them with an exclude pattern as described above.

Also consider whether you need package versions to be reported (added in v1.1). Scraping package versions is expensive, so disabling version reporting may help speed things up.

In the long term, if users of Site Sniffer find this becomes a problem, I will re-engineer it to spread the processing load. At present, I do not have evidence to suggest that is necessary.